Artificial Intelligence is an emerging technology that is quickly becoming the new normal. It spans a wide range of disciplines, such as Data Science, Machine Learning, and Business Intelligence. Today, AI is applied in almost every domain around us.

In this blog, I'm going to walk through three key terms you should know if you are exploring AI. So, let's get started!

1. AutoML

Sometimes we feel overwhelmed by the vastness of AI and wonder how to learn it and use it in our applications. But even if we don't know AI in depth, we can still use AI technology in our applications.

Surprised? Wondering how that is possible?

Yes, it is possible, thanks to AutoML.

Automated Machine Learning, abbreviated as AutoML, is the process of automating the application of Machine Learning to real-world problems. It is an Artificial Intelligence-based solution intended to make machine learning easier to use across different domains and to improve its efficiency.

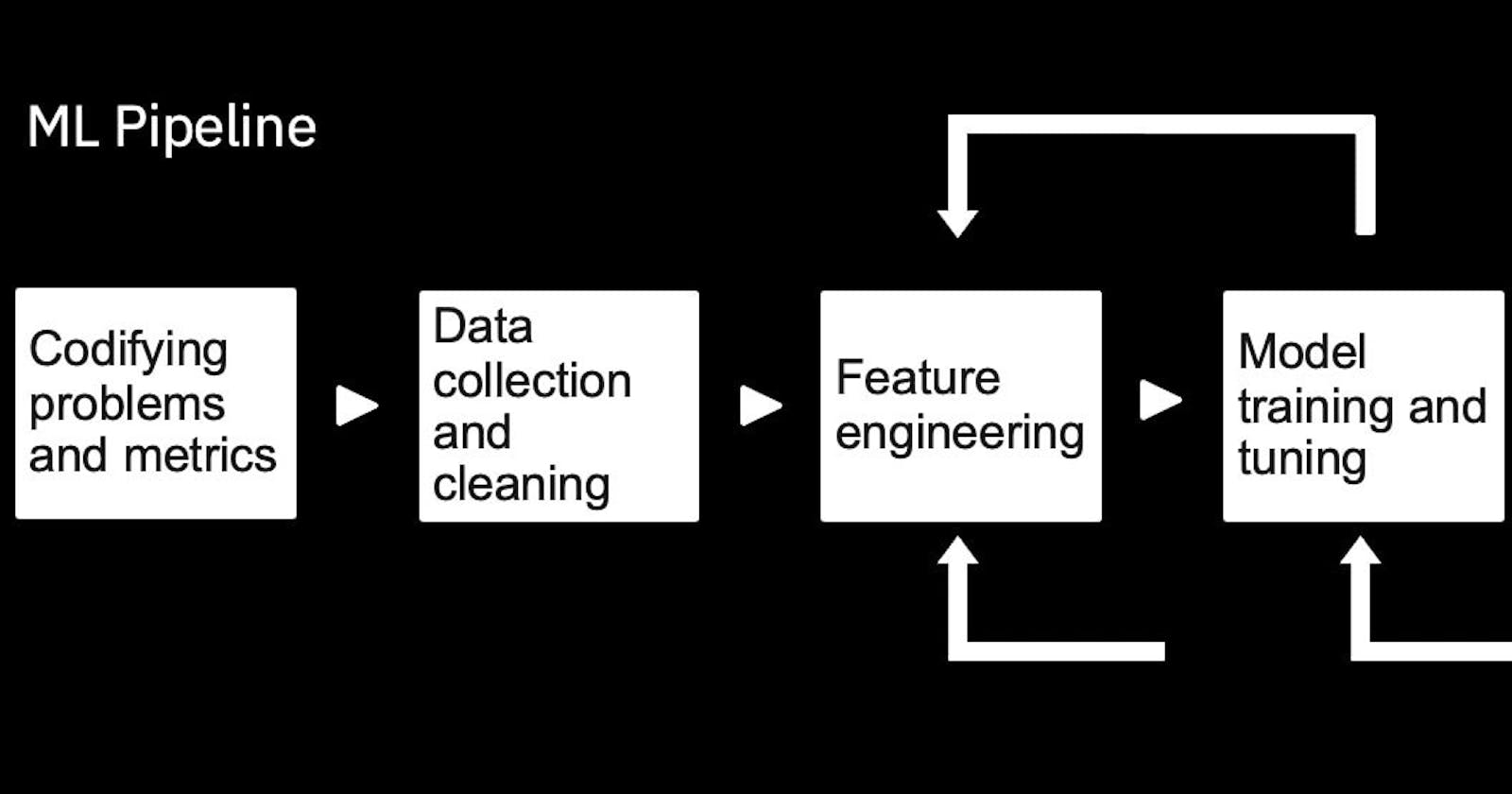

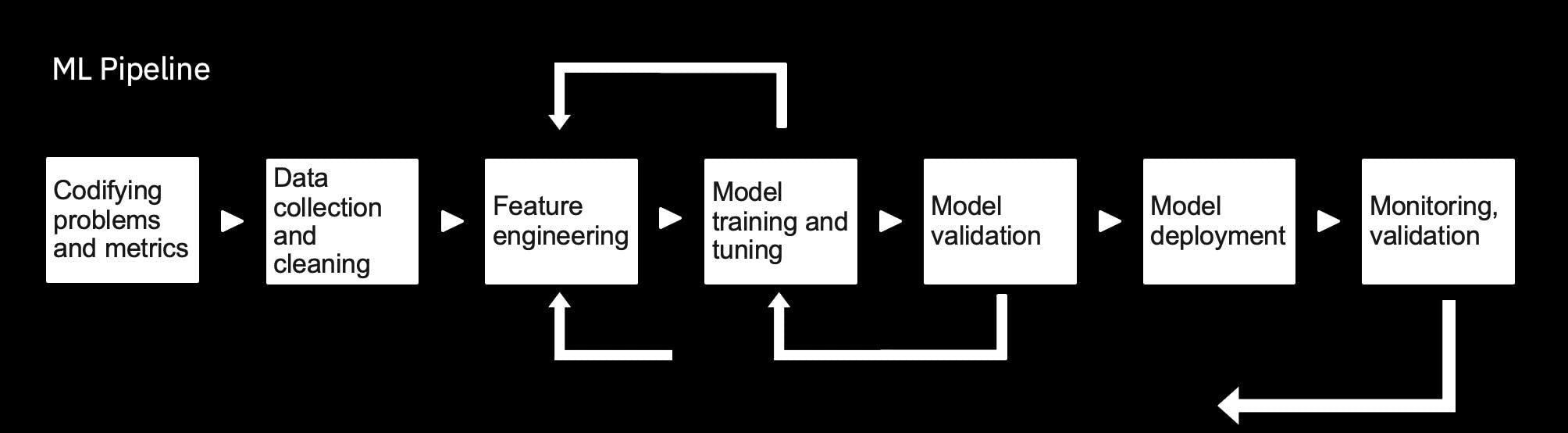

A typical machine learning pipeline involves many phases, such as:

- Data preprocessing

- Feature selection

- Selecting an appropriate model for the features

- Hyperparameter tuning

- Model validation

- Post-processing the models

- Analysis of the obtained results

Performing all of these tasks by hand requires machine learning expertise. With AutoML, they can be automated and applied end-to-end, which lets people who are not machine learning experts actually make use of it.
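
To make the idea concrete, here is a minimal sketch of one small slice of what an AutoML tool automates: trying several hyperparameter values and keeping the one that validates best. It is a toy written for this post, not how any particular AutoML product works. The data, the function names (`make_toy_data`, `knn_predict`, `auto_select`) and the candidate values of `k` are all assumptions made for illustration.

```python
import random


def make_toy_data(n=200, seed=0):
    """Build a hypothetical dataset: two noisy 2-D clusters labelled 0 and 1."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.0 if label == 0 else 3.0
        point = (rng.gauss(center, 1.0), rng.gauss(center, 1.0))
        data.append((point, label))
    return data


def squared_distance(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def knn_predict(train, point, k):
    """Classify `point` by majority vote among its k nearest training points."""
    nearest = sorted(train, key=lambda item: squared_distance(item[0], point))[:k]
    votes_for_one = sum(label for _, label in nearest)
    return 1 if votes_for_one * 2 > k else 0


def accuracy(train, valid, k):
    """Fraction of validation points that k-NN labels correctly."""
    correct = sum(knn_predict(train, point, k) == label for point, label in valid)
    return correct / len(valid)


def auto_select(data, candidate_ks=(1, 3, 5, 9, 15)):
    """Automate hyperparameter tuning and model validation for k-NN."""
    split = int(len(data) * 0.75)          # simple hold-out split
    train, valid = data[:split], data[split:]
    scores = {k: accuracy(train, valid, k) for k in candidate_ks}
    best_k = max(scores, key=scores.get)   # keep the best-validating setting
    return best_k, scores


best_k, scores = auto_select(make_toy_data())
print("validation accuracy per k:", scores)
print("selected k:", best_k)
```

Real AutoML tools typically search over much more than a single hyperparameter (preprocessing steps, features, model families), but the loop is similar in spirit: propose a candidate, validate it, and keep the best one.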

Advantages:

- Less expertise is required, since everything is off-the-shelf.

- It saves time, since there is no need to build the external infrastructure around the models.

- It speeds up model development by removing unnecessary data science work, since pre-trained custom models are used.

Disadvantages:

- It is inflexible and a poor fit when requirements change rapidly. If a change occurs, a completely new custom model needs to be built.

- Because we lack the expertise, we may not know why the model is not working as expected, which leaves us with less insight into the data.

- It offers only generalized models, which have to be adapted to our own requirements. As a result, the quality of the model may be lower than that of a specialized model.

2. GANs

We have all heard of fake emails, fake credit cards, and even fake products. But have you heard of creating a fake person?

Surprised again?

Yes, it is possible, through GANs.

Wanna try it? Visit this link.

Generative Adversarial Networks, abbreviated as GANs, are generative models that can generate new data instances based on the training data provided. They perform unsupervised learning by finding patterns in the input data and learning them. They then use that knowledge to generate new data that resembles the original dataset without being copied from it.

For example, a GAN can generate a new, unknown face that blends features it has learned from images of many different people, just like how some parts of our face resemble our father, some resemble our mother, and so on. Maybe god is using GANs, lol ;))

GANs usually have two parts:

- Generator model

- Discriminator model

The Generator model learns from the training data to generate new examples, and the Discriminator model classifies examples as either real (taken from the dataset) or fake (produced by the generator).

The setup is called adversarial because the generator and the discriminator are trained together while pursuing opposite goals: the generator tries to fool the discriminator, and the discriminator tries to catch it. As the generator keeps producing new data, the discriminator keeps trying to separate it into real and fake. At some point, the generated data becomes convincing enough that the discriminator can no longer tell fake from real.
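
The tug-of-war can be written down as two loss functions. The sketch below uses the binary cross-entropy losses that are commonly used for GANs (with the popular "non-saturating" form for the generator). There are no actual networks here: the discriminator outputs are made-up probabilities, and the function names are my own, chosen only to show how the two losses move as training progresses.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss for the discriminator.

    d_real: probabilities of "real" that D assigns to real samples
    d_fake: probabilities of "real" that D assigns to generated samples
    The loss is low when real samples score near 1 and fakes score near 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D scores the fakes as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical D outputs early in training: fakes are easy to spot.
early_real, early_fake = [0.9, 0.95, 0.85], [0.1, 0.05, 0.2]

# Hypothetical D outputs late in training: D is reduced to guessing.
late_real, late_fake = [0.5, 0.5, 0.5], [0.5, 0.5, 0.5]

for name, real, fake in [("early", early_real, early_fake),
                         ("late", late_real, late_fake)]:
    print(f"{name}: D loss = {discriminator_loss(real, fake):.3f}, "
          f"G loss = {generator_loss(fake):.3f}")
```

Early on, the discriminator's loss is low and the generator's is high. Once the discriminator outputs 0.5 for everything, it is effectively flipping a coin, which is the "cannot tell fake from real" point described above.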

GANs are a trending and fascinating area of Artificial Intelligence, with applications such as:

- Image-to-image translation

- Generating realistic objects

- Photograph editing

3. Cognitive Computing

Even though computers are faster than us human beings, they were traditionally capable only of processing and calculation. They could not behave like a human being, since they could not perform complex tasks such as object recognition and language interpretation.

This led to the birth of Artificial Intelligence, and to Cognitive Computing.

Cognitive Computing is a step towards making computers work and behave like a human brain. It uses self-learning and reinforcement learning methods to learn from its surroundings and situations, just like how we learn things. To achieve this, it draws on technologies such as pattern recognition, Natural Language Processing, and data mining.

Cognitive Computing helps cross-reference structured and unstructured data, which can make analysis more accurate. It is especially helpful when large datasets need to be analysed.
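
As a rough illustration of what cross-referencing structured and unstructured data means, the toy sketch below links free-text support messages to rows in an order table. Everything in it is hypothetical (the records, the messages, and the `cross_reference` function), and plain keyword matching stands in for the Natural Language Processing a real cognitive system would use.

```python
import re

# Structured data: hypothetical order records with fixed fields.
orders = [
    {"order_id": 101, "customer": "asha", "product": "router"},
    {"order_id": 102, "customer": "ben", "product": "laptop"},
    {"order_id": 103, "customer": "asha", "product": "headphones"},
]

# Unstructured data: hypothetical free-text messages from customers.
messages = [
    ("asha", "My new router keeps dropping the connection."),
    ("ben", "Love the laptop, but the charger is missing!"),
    ("asha", "Just saying hi."),
]


def cross_reference(orders, messages):
    """Link each message to the sender's orders whose product it mentions."""
    links = []
    for customer, text in messages:
        words = set(re.findall(r"[a-z]+", text.lower()))
        for order in orders:
            if order["customer"] == customer and order["product"] in words:
                links.append((order["order_id"], text))
    return links


links = cross_reference(orders, messages)
for order_id, text in links:
    print(f"order {order_id} <- {text}")
```

Once a message is tied to an order, the analysis can use both sources together, for example to see which products attract the most complaints.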

Cognitive assistants such as chatbots can provide personalised recommendations and behavior predictions, which can improve customer experience and interaction with the business system.